In recent years, we've seen an immense increase in connected and smart devices, leading to a push of AI-technology from the cloud towards the edge, for both consumer and industrial applications. The reality is that edge devices and mobile platforms are often subject to constraints such as latency, memory, computational power and energy. Furthermore, applications such as process control and other critical or time-sensitive applications are often subject to strict constraints and requirements. To meet the ever-increasing demand for AI on the edge, novel methods are needed that enable powerful AI on resource-constrained devices.

To address this need, we research methods and tools to design, analyse, optimize and distribute machine learning algorithms to make optimal use of the available resources on the edge, fog and in the cloud, without compromising on their accuracy.

Research examples

- Resource analysis and distribution: on devices with limited resources we need to make sure that the tasks can be executed within the application and hardware constraints. This is especially the case for time and safety critical applications. Here, we use a hybrid analysis method that splits the application into small blocks, allowing us to estimate the worst case resource consumption in an efficient way. Using machine learning algorithms, we can also predict how much resources would be consumed on other platforms, using software and hardware features. If we have multiple devices at our disposal, we can use this block-based information to determine the optimal distribution of an application across multiple devices, taking into account multiple objectives at once. This can be done statically at deployment time, or dynamically at run time, using novel reinforcement learning methods.

- Component adaptation: A first approach to optimize neural networks for constrained devices is Neural Architecture Search. Here, an algorithm automatically searches optimal neural architectures for a specific task on a specific device, taking into account the hardware architecture and its limitations. Our research focuses on significantly reducing the time needed to find these fine-tuned architectures. An alternative approach is to compress the neural network by removing all excess information. Starting with an off the shelf solution, we identify which knowledge is relevant for a specific task and prune the unneeded connections of the neural network, lowering its computational requirements. This leads to a more resource efficient implementation, while maintaining the level of accuracy within a specific context.

- Neuro-inspired computing systems: in this track, we investigate 3rd generation neural network architectures inspired by new insights about the brain, such as spike encodings, different neuron types, synaptic plasticity mechanisms and novel topologies. Enabling on device adaptation and learning in noisy or changing environments with minimal compute and memory resources.

-

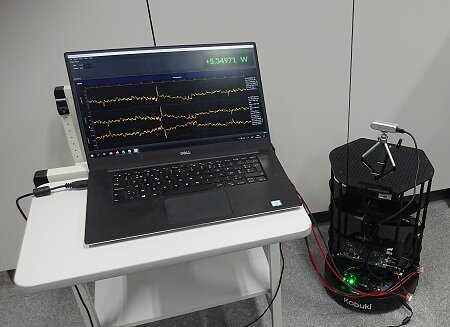

Transferring algorithms and models from a simulator to a real device is still challenging. Yet, at IDlab we have the experience of setting up experiments and testing our novel research in real-world settings.

Transferring algorithms and models from a simulator to a real device is still challenging. Yet, at IDlab we have the experience of setting up experiments and testing our novel research in real-world settings. -

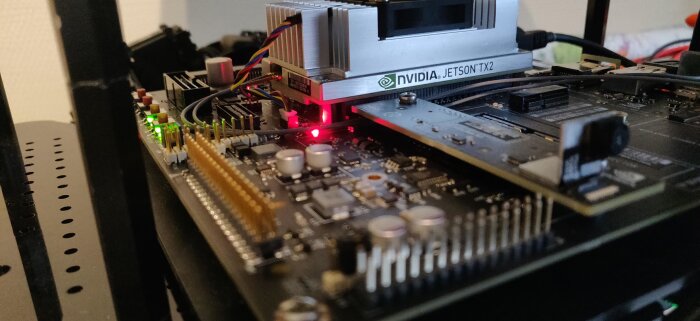

IDLab researches optimization methods of machine learning algorithms for resource constrained embedded devices.

IDLab researches optimization methods of machine learning algorithms for resource constrained embedded devices. -

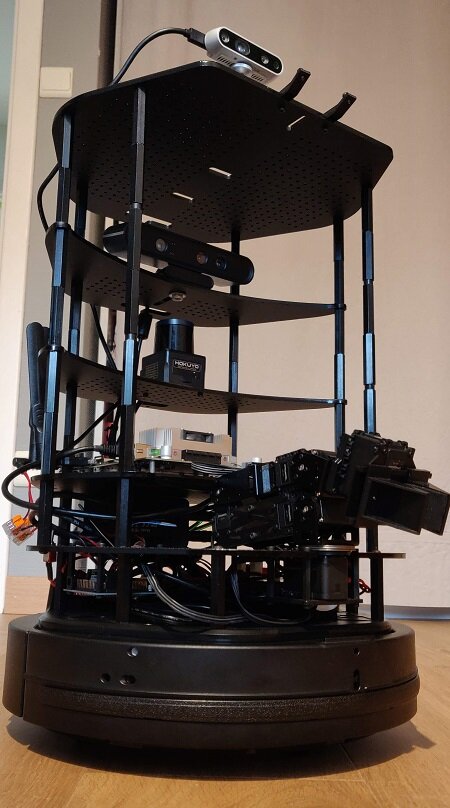

A close look at one of our robot platforms that is being used to validate our research in the real world. These platforms can be equipped with a large variety of sensors and actuators.

A close look at one of our robot platforms that is being used to validate our research in the real world. These platforms can be equipped with a large variety of sensors and actuators.